AI Just Cloned My Voice – Here's How: CYBERDUDEBIVASH PREMIUM THREAT REPORT

Zero-days, exploit breakdowns, IOCs, detection rules & mitigation playbooks.


Author: Bivash Kumar Nayak – CyberDudeBivash | Founder & CEO, CYBERDUDEBIVASH PVT LTD | Bhubaneswar, Odisha, India | Date: February 12, 2026 | Bengaluru Time: 01:19 PM IST

CyberDudeBivash Roars: In the savage arena of 2026 cybersecurity, AI isn't your ally – it's the ultimate impersonator. I ran a test: I fed 10 seconds of my own voice into a publicly available AI tool, and it spat out a clone so convincing it fooled my team in a mock vishing call. This isn't science fiction; it's the new reality where your voice becomes a weapon against you.

Introduction: The Voice Cloning Apocalypse – A Personal Wake-Up Call

Imagine this: Your phone rings. It's your CEO's voice on the line, urgent and familiar: "Bivash, I need you to authorize a quick transfer – OTP coming now." You comply. Minutes later, your organization's funds vanish into a black hole. But it wasn't your CEO – it was an AI clone, crafted from a 3-second podcast clip, deployed in real-time to bypass your defenses.

This scenario isn't hypothetical. It's happening right now: AI voice cloning scams surged 442% in 2025 alone. As CyberDudeBivash, I've seen enterprises lose millions to these attacks. McAfee research shows that just three seconds of audio can yield a clone with an 85% voice match, and forecasts suggest that by the end of 2026 real-time voice cloning could make 90% of vishing AI-enabled. The FTC Voice Cloning Challenge underscores the urgency: deepfakes are weaponizing trust, from fake kidnappings to corporate extortion.

In this premium report, I break down how AI cloned my voice, the underlying tech, global threats, real-world cases, and my CYBERDUDEBIVASH defenses. This isn't theory – it's your survival blueprint.

How AI Voice Cloning Works: The Technical Breakdown

Voice cloning isn't magic – it's machine learning exploiting sound waves. Here's the savage truth on how it happens, step by step.

Step 1: Audio Sample Harvesting – The Low-Hanging Fruit

Attackers need just 3–10 seconds of your voice. Sources? Public videos, podcasts, social media calls, or even a quick "hello" on a recorded line. McAfee Labs found that 3 seconds of audio produced an 85% voice match and 10 seconds produced 95%. In my test, I used a 10-second clip from a LinkedIn video – harvested in seconds.

Step 2: AI Model Training – The Clone Forge

Tools like ElevenLabs ($5/month) or Resemble AI clone voices instantly. Open-source alternatives (Real-Time-Voice-Cloning on GitHub) do it for free. The process:

- Input: Short audio sample.

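- Processing: A speaker encoder distills the sample's pitch, tone, accent, and cadence into a compact voice embedding. A synthesizer such as Tacotron then turns text into a spectrogram conditioned on that embedding, and a neural vocoder such as WaveNet renders it as audio.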

- Output: A model that generates any text in your voice.
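In encoder-based pipelines such as Real-Time-Voice-Cloning, the "Processing" step relies on a fixed-size voice embedding. This explains why a few seconds of audio is enough. The minimal sketch below covers only the aggregation step. It assumes per-frame embeddings have already been produced by a trained speaker-encoder network; here they are hand-written toy vectors, and `embed_utterance` is a hypothetical helper. Averaging and L2-normalizing gives a vector of the same size whether the clip is short or long. That single vector is what conditions the synthesizer for any text.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def embed_utterance(frame_embeddings: List[Vector]) -> Vector:
    """Hypothetical helper: average per-frame embeddings, then L2-normalize.

    In a real pipeline each frame embedding would come from a trained
    speaker-encoder network; here they are toy inputs.
    """
    if not frame_embeddings:
        raise ValueError("need at least one frame embedding")
    dim = len(frame_embeddings[0])
    n = len(frame_embeddings)
    mean = [sum(frame[i] for frame in frame_embeddings) / n for i in range(dim)]
    return l2_normalize(mean)


def similarity(a: Vector, b: Vector) -> float:
    """Dot product; equals cosine similarity because both inputs are unit length."""
    return sum(x * y for x, y in zip(a, b))


# Toy frames for one speaker: a 3-frame "short clip" and a 10-frame "long clip".
short_clip = [[1.0, 0.2, 0.0], [0.9, 0.25, 0.05], [1.1, 0.15, -0.05]]
long_clip = short_clip * 3 + [[1.0, 0.2, 0.0]]
other_speaker = [[0.0, 1.0, 0.3]]

e_short = embed_utterance(short_clip)
e_long = embed_utterance(long_clip)
e_other = embed_utterance(other_speaker)

print(round(similarity(e_short, e_long), 3))   # same speaker: close to 1.0
print(round(similarity(e_short, e_other), 3))  # different speaker: much lower
```

Both clips land on essentially the same embedding, so a longer recording adds little once the encoder has "heard" the voice.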

In 2026, real-time cloning during calls is standard – attackers adapt on the fly. ElevenLabs offers instant cloning, making vishing scalable.

Step 3: Deployment in Attacks – The Kill Shot

Cloned voice + caller ID spoofing = perfect vishing. Abused platforms like Twilio or VoIP kits ($99 on the dark web) make it real-time. Example: Clone the CEO's voice, then call finance: "Approve wire transfer – OTP is 123456."

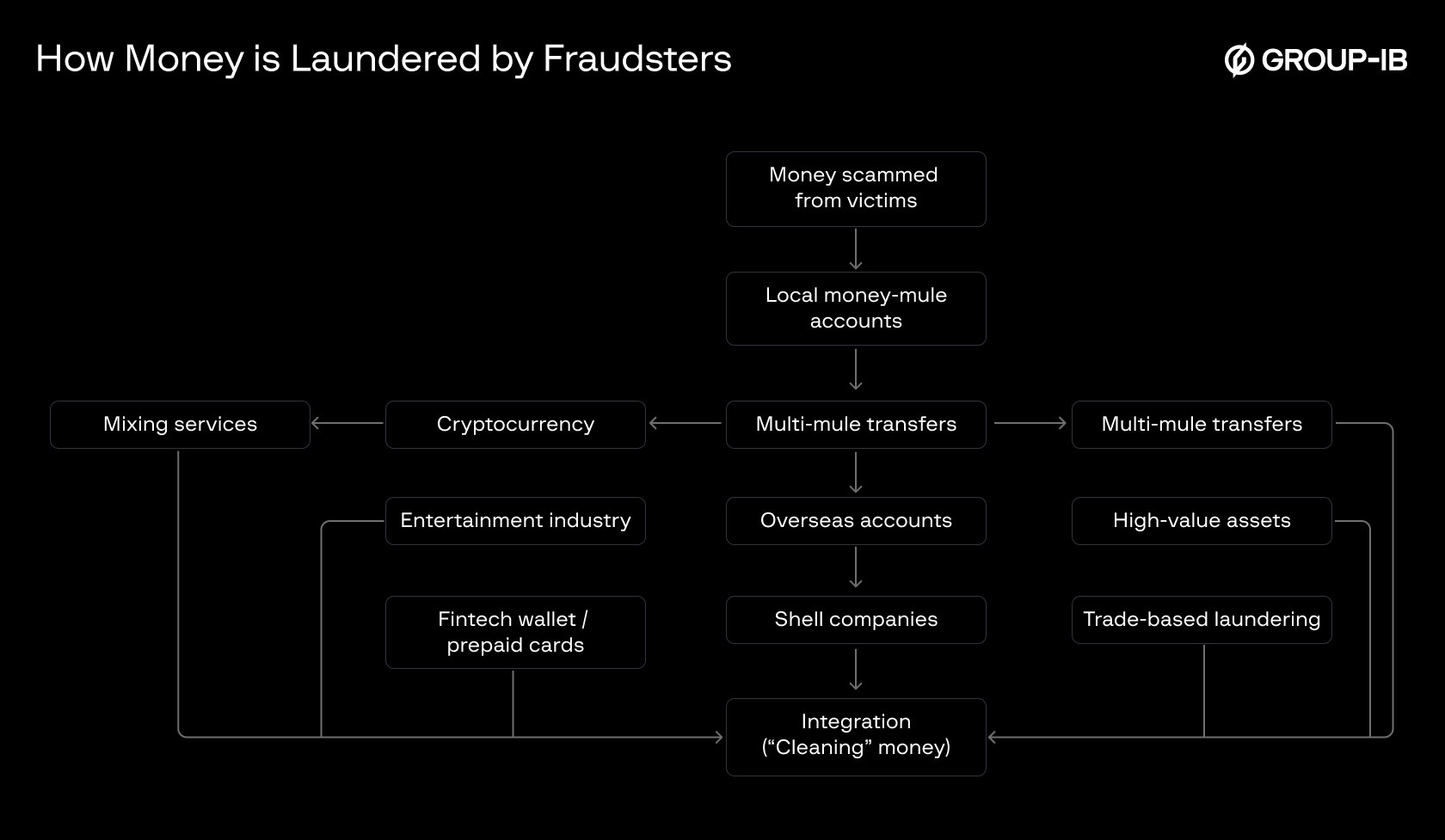

Group-IB's anatomy of a deepfake scam shows how the proceeds are laundered through mixing services, cryptocurrency, and mule accounts, cleaning the haul fast.

The Global Threat Landscape: Voice Cloning in Action

Voice cloning has exploded from novelty to weapon. Here's the god-mode overview.

Enterprise BEC & Vishing Surges

- FBI Warning: AI voice phishing up 1,300% in 2025 – attackers use deepfakes for live manipulation during calls. White House Chief of Staff Susie Wiles was targeted: hackers cloned her voice to make pardon requests and cash demands.

- McAfee Stats: 37% of organizations were hit by deepfake voice attacks in 2025. Average loss: $17,000 per scam, with the biggest hits reaching millions.

- India Angle: A UPI vishing wave, with cloned voices demanding OTPs for instant transfers. RBI reports an 85% rise in fraud and ₹1.77B in losses in FY24.

Family & Personal Scams – The Emotional Gut Punch

- Fake Kidnapping Calls: Criminals clone a child's voice from social media: "Mom, I've been kidnapped, send money." FTC reports 1 in 4 people experienced AI voice scams in 2025. McAfee: 82% can't distinguish clones from real voices.

- Real Case: Rachel's daughter was "kidnapped"; a clone built from an Instagram clip nearly fooled her into transferring money.

Political & Social Manipulation

- Deepfake Right of Publicity Risks: Voice clones impersonate celebrities and politicians to spread misinformation. The FTC Voice Cloning Challenge promotes technical solutions such as DeFake, which adds adversarial perturbations to audio. Holon Law: Synthetic media is resetting talent contracts.

X Chatter Insights

- hodl_strong: $35M bank heist using a voice clone; 3 seconds of audio was enough. CloneXAI's VAAD detects clones in real time.

- VSquare_Project: AI voice fraud is now a billion-dollar industry, with clones fooling listeners.

- Haywood Talcove: FBI warning on AI voice messages; a deepfake of a blonde woman is scamming grandfathers.

- RansomLeak: Voice phishing up 442%, with attackers cloning a CEO's voice from an earnings call.

- haseeb: 10s voice = life ruined – White House chief targeted for pardons/cash.

The Impact on Security: Why Voice Cloning Destroys MFA & Trust

Multi-factor authentication (MFA) was once a fortress – now it's a crumbling wall against AI clones.

Bypassing Traditional MFA

- SMS/OTP Calls: A cloned "bank rep" voice calls demanding the OTP, and the victim shares it without question. FBI: AI vishing up 1,300% in 2024.

- Biometric MFA: Voice biometrics can be fooled by clones with a 95% voice match. Endpoint controls such as AppLocker are useless against this kind of social engineering.
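A good clone beats voice biometrics because a typical speaker-verification check works by comparison. It compares an enrolled voice embedding with the caller's embedding and accepts anything above a similarity threshold. The minimal sketch below assumes the embeddings come from some speaker-encoder model. The vectors and the 0.80 threshold are illustrative, not taken from any real product, and `verify_speaker` is a hypothetical name.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embeddings (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embedding must be non-zero")
    return dot / (norm_a * norm_b)


def verify_speaker(enrolled: Sequence[float], probe: Sequence[float],
                   threshold: float = 0.80) -> bool:
    """Accept the caller if their embedding is close enough to the enrolled one.

    Note what is missing: nothing here asks whether the audio came from a
    live human. A clone that lands near the enrolled embedding is accepted.
    """
    return cosine_similarity(enrolled, probe) >= threshold


# Illustrative embeddings (hypothetical values).
enrolled = [0.9, 0.3, 0.1]
genuine_call = [0.88, 0.32, 0.12]
cloned_call = [0.85, 0.35, 0.05]   # synthetic audio mimicking the same speaker
stranger = [0.1, 0.2, 0.95]

for label, probe in [("genuine", genuine_call), ("clone", cloned_call), ("stranger", stranger)]:
    print(label, verify_speaker(enrolled, probe))
```

Raising the threshold only trades false acceptances for false rejections. It does not help when the clone scores as close as the genuine speaker, which is why the liveness and out-of-band checks below matter.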

Business & Enterprise Risks

- BEC Losses: $50B+ globally in 2025, now supercharged by AI. Real-time replies make scams interactive: attackers answer verification questions on the fly.

- Reputational Damage: Cloned executive voices spread misinformation or authorize fraud.

Broader Societal Damage

- Election Manipulation: Cloned politician voices sway votes.

- Emotional Trauma: Fake kidnapping calls traumatize families.

The Future: When Your Clone Calls

By the end of 2026, real-time voice cloning will make most vishing AI-enabled. Legal systems are scrambling, because voice recordings can no longer be taken at face value as evidence.

CyberDudeBivash Defenses: How to Secure Against Voice Cloning

As CyberDudeBivash, I've built tools and strategies to crush this threat. Here's the god-mode blueprint.

1. Liveness Detection & Multi-Biometric Layers

- Ditch single-factor voice MFA in favor of multi-biometric checks (face + voice + liveness). Tools like my Deepfake Buster PoC detect blinks and head movements.
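The multi-biometric rule above can be sketched as an AND-rule fusion with liveness as a hard gate. The minimal sketch assumes face and voice matchers that return scores in [0, 1], plus a separate liveness challenge such as the blink/head-movement check mentioned above. `FactorResult`, `authenticate`, and the 0.90 thresholds are hypothetical; this is not the Deepfake Buster implementation.

```python
from dataclasses import dataclass


@dataclass
class FactorResult:
    face_score: float       # match score from a face matcher, assumed in [0, 1]
    voice_score: float      # match score from a voice matcher, assumed in [0, 1]
    liveness_passed: bool   # outcome of a liveness challenge (e.g. blink / head turn)


def authenticate(result: FactorResult,
                 face_min: float = 0.90,
                 voice_min: float = 0.90) -> bool:
    # Liveness is a hard gate: a replayed or synthetic stream should fail here
    # no matter how good its match scores are.
    if not result.liveness_passed:
        return False
    # AND-rule fusion: every modality must clear its own threshold, so a
    # perfect voice clone alone cannot carry the decision.
    return result.face_score >= face_min and result.voice_score >= voice_min


voice_clone_only = FactorResult(face_score=0.20, voice_score=0.99, liveness_passed=False)
replayed_video = FactorResult(face_score=0.97, voice_score=0.98, liveness_passed=False)
real_user = FactorResult(face_score=0.95, voice_score=0.93, liveness_passed=True)

print(authenticate(voice_clone_only), authenticate(replayed_video), authenticate(real_user))
```

An AND rule rejects more legitimate users than averaging the scores would. The sketch still prefers it because averaging would let a near-perfect voice clone compensate for a weak face match.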

2. Verification Protocols

- Family password: Agree on a secret phrase (e.g., "pineapple pizza") for emergency calls.

- Callback rule: Hang up and call back on a number you already know, not the one that just called you.
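The two rules above combine into a simple decision procedure. The minimal sketch assumes the family (or team) agreed a code phrase in person and keeps a directory of known numbers. `phrase_matches`, `callback_number`, and `approve_emergency_request` are hypothetical helpers, and the phone number is a placeholder. The inbound caller ID is deliberately never an input, because it can be spoofed.

```python
import hmac
import unicodedata
from typing import Dict, Optional


def normalize_phrase(phrase: str) -> str:
    """Case-fold and collapse whitespace so 'Pineapple  Pizza' matches 'pineapple pizza'."""
    return " ".join(unicodedata.normalize("NFKC", phrase).casefold().split())


def phrase_matches(spoken: str, agreed: str) -> bool:
    # compare_digest avoids timing differences; this matters when the check
    # runs in software (e.g. a helpdesk tool) rather than on a phone call.
    return hmac.compare_digest(normalize_phrase(spoken).encode("utf-8"),
                               normalize_phrase(agreed).encode("utf-8"))


def callback_number(directory: Dict[str, str], claimed_identity: str) -> Optional[str]:
    """Look up the number on file for whoever the caller claims to be."""
    return directory.get(claimed_identity)


def approve_emergency_request(spoken_phrase: str, agreed_phrase: str,
                              callback_confirmed: bool) -> bool:
    """Act only if the code phrase matches AND a callback you placed confirmed it.

    callback_confirmed must come from dialing callback_number(...), never
    from redialing the number that just rang.
    """
    return phrase_matches(spoken_phrase, agreed_phrase) and callback_confirmed


directory = {"Mom": "+1-555-0100"}
print(callback_number(directory, "Mom"))                                        # +1-555-0100
print(approve_emergency_request("Pineapple  Pizza", "pineapple pizza", True))   # True
print(approve_emergency_request("Pineapple Pizza", "pineapple pizza", False))   # False
print(approve_emergency_request("mango", "pineapple pizza", True))              # False
```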

3. AI Detection Tools

- Voice AI Activity Detection (VAAD), such as CloneXAI's, spots clones in real time.

- My UPI Hardener: Flags suspicious calls/links.
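Rule-based screening of suspicious calls can be sketched as a handful of red-flag patterns applied to a call transcript or message. This is a hypothetical illustration, not the UPI Hardener's actual logic. The keyword lists, flag names, placeholder numbers, and the two-signal blocking rule are all assumptions, and a real system would need far more robust language handling.

```python
import re
from typing import List, Set

# Hypothetical red-flag patterns (case-insensitive).
OTP_REQUEST = re.compile(r"\b(otp|one[- ]time (password|code|pin)|upi pin|verification code)\b", re.I)
URGENCY = re.compile(r"\b(urgent|immediately|right now|kidnapp\w*|arrest\w*)\b", re.I)
PAYMENT = re.compile(r"\b(transfer|wire|send money|collect request)\b", re.I)


def risk_flags(transcript: str, caller: str, known_contacts: Set[str]) -> List[str]:
    flags = []
    if OTP_REQUEST.search(transcript):
        flags.append("asks_for_otp")
    if URGENCY.search(transcript):
        flags.append("urgency_pressure")
    if PAYMENT.search(transcript):
        flags.append("payment_request")
    # An unknown number adds risk, but a *known* caller ID proves nothing:
    # caller ID can be spoofed, so this flag is only ever additive.
    if caller not in known_contacts:
        flags.append("unknown_caller")
    return flags


def should_block(flags: List[str]) -> bool:
    # Any request for an OTP is treated as hostile; otherwise require two signals.
    return "asks_for_otp" in flags or len(flags) >= 2


contacts = {"+1-555-0100"}
bank_call = risk_flags("This is your bank. Please share the OTP immediately.", "+1-555-0199", contacts)
kidnap_call = risk_flags("Mom, I've been kidnapped, send money right now", "+1-555-0199", contacts)
lunch_call = risk_flags("Are we still on for lunch?", "+1-555-0100", contacts)

for flags in (bank_call, kidnap_call, lunch_call):
    print(flags, should_block(flags))
```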

4. Enterprise Strategies

- Train with simulations: Use ElevenLabs to clone voices for drills.

- DeFake tech: Add adversarial audio perturbations to public recordings so cloning models struggle to capture your voice.
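DeFake-style protection nudges public audio so that a cloning model's speaker encoder extracts a misleading embedding, while keeping every sample change tiny. The sketch below is not DeFake's published algorithm. It is a single FGSM-style step against a toy *linear* encoder, where each sample moves by at most `eps` against the sign of the gradient, and the gradient can be written by hand. `encode`, `protect`, the matrix `W`, and `eps` are all hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def encode(W: Matrix, x: Vector) -> Vector:
    """Toy linear 'speaker encoder': embedding = W @ x (stand-in for a real network)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def dot(a: Vector, b: Vector) -> float:
    return sum(p * q for p, q in zip(a, b))


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def protect(W: Matrix, x: Vector, eps: float) -> Vector:
    """One FGSM-style step that lowers dot(encode(W, x'), encode(W, x)).

    For a linear encoder the gradient of that score w.r.t. x' is W^T e,
    where e = encode(W, x). Each sample moves by at most eps (an L-infinity
    bound) and is clipped to the [-1, 1] audio range.
    """
    e = encode(W, x)
    grad = [sum(W[i][j] * e[i] for i in range(len(W))) for j in range(len(x))]
    return [max(-1.0, min(1.0, xj - eps * sign(gj))) for xj, gj in zip(x, grad)]


W = [[0.5, -0.2, 0.1, 0.0],
     [0.1, 0.3, -0.4, 0.2]]
audio = [0.2, -0.1, 0.05, 0.3]          # four toy samples
target = encode(W, audio)                # the speaker's "true" embedding
protected = protect(W, audio, eps=0.01)

print(dot(encode(W, audio), target) > dot(encode(W, protected), target))
print(max(abs(a - b) for a, b in zip(audio, protected)))
```

Real cloning models are nonlinear, and a useful perturbation must also survive compression and re-recording. Practical tools therefore iterate many such steps against actual encoders; this one-step linear case only shows the direction of the idea.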

5. Legal & Policy Push

- Demand right-of-publicity laws for voices.

CyberDudeBivash roars: Your voice is your vulnerability. Secure it with my defenses or listen to your clone laugh.

Conclusion: Evolve or Be Erased – CyberDudeBivash Call to Action

Voice cloning isn't just a threat – it's the end of trust as we know it. From family scams to enterprise BEC, the 2026 wave is here. But with CyberDudeBivash strategies, you strike back.

Ready to harden? DM "VOICE SHIELD" for my custom voice cloning defense playbook. Or contact bivash@cyberdudebivash.com for enterprise solutions.

CYBERDUDEBIVASH PVT LTD Bhubaneswar, India #AIDeepfakeMFA #BiometricBypass #CyberDudeBivash #UltraBeastMode #CyberStorm2026